1. Overview of Generative AI

A class of artificial intelligence methods known as “generative AI” is capable of producing original content. This can include music, graphics, text, and other media. Generative AI generates new data instances that resemble the training data, in contrast to classical AI, which concentrates on identifying patterns and making predictions from input data. It also contrasts with discriminative models, which focus only on separating the different categories.

2. Generative AI Applications

- Text generation (e.g., GPT-3): producing text that resembles human language for use in narrative composition and chatbots.

- Image generation: using GANs like StyleGAN to create realistic images from scratch.

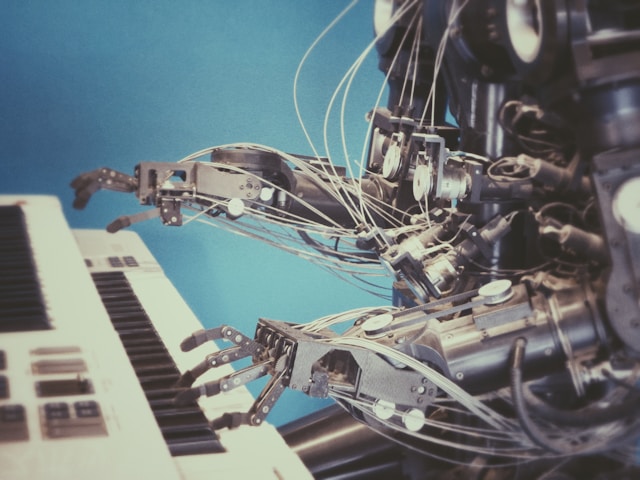

- Music Generation: Writing original works of music.

- Video Generation: Content creation for videos.

3. Important Theories and Models

3.1. Generative Models

GANs, or Generative Adversarial Networks:

(a) Generator: Produces pseudo (fake) data. In the analogy developed below, this is our mafia.

(b) Discriminator: Assesses whether the data is real. This is our police, which tries to control counterfeiting by checking the pseudo data in circulation.

In a game-theoretic setting, these two elements compete, and that competition raises the quality of the generated data. We can think of the generator as a mafia producing counterfeit notes and the discriminator as the police trying to detect them. As the police get better at spotting fakes, the mafia must produce more convincing notes, so over the course of training the generated data becomes increasingly hard to distinguish from real data.
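The opposing goals of the two players can be sketched with the binary cross-entropy losses commonly used for GANs. This is a minimal illustration in plain Python, not a training loop: the discriminator outputs in `d_real`, `weak_fakes`, and `strong_fakes` are made-up numbers, and the generator loss shown is the widely used non-saturating form, which is an assumption rather than something the text above specifies.

```python
import math

def bce(prediction: float, target: float) -> float:
    """Binary cross-entropy for one probability in (0, 1)."""
    eps = 1e-12  # keeps log() away from zero
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """The 'police' want D(real) close to 1 and D(fake) close to 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake: list[float]) -> float:
    """The 'mafia' want D(fake) close to 1 (non-saturating form)."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs: probability that a sample is real.
d_real = [0.9, 0.8]
weak_fakes = [0.1, 0.2]    # the discriminator spots these easily
strong_fakes = [0.6, 0.7]  # the discriminator is mostly fooled

print("weak fakes:  ", discriminator_loss(d_real, weak_fakes), generator_loss(weak_fakes))
print("strong fakes:", discriminator_loss(d_real, strong_fakes), generator_loss(strong_fakes))
```

Better fakes lower the generator's loss and raise the discriminator's, which is the tug-of-war the analogy describes.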

VAEs, or Variational Autoencoders: These use an encoder-decoder architecture.

(a) Data is transformed into a latent space representation by an encoder.

(b) Data is generated from the latent space by the decoder.

In a nutshell, both pieces revolve around a latent space representation: the component that converts data into the latent representation is the encoder, and the component that converts the latent representation back into data is the decoder.
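The encode, sample, decode flow can be sketched with a toy one-dimensional latent space. Everything here is illustrative: the encoder and decoder weights are hand-picked rather than learned, and the sampling step (often called the reparameterization trick) and the KL term are standard VAE ingredients that the text above does not spell out.

```python
import math
import random

def encode(x: list[float]) -> tuple[float, float]:
    """Toy encoder: 2-D input -> mean and log-variance of a 1-D latent.
    The weights are hypothetical, not learned."""
    mu = 0.5 * x[0] + 0.5 * x[1]
    log_var = -2.0  # fixed, small variance for illustration
    return mu, log_var

def sample_latent(mu: float, log_var: float, rng: random.Random) -> float:
    """Draw z = mu + sigma * eps with eps ~ N(0, 1)."""
    sigma = math.exp(0.5 * log_var)
    return mu + sigma * rng.gauss(0.0, 1.0)

def decode(z: float) -> list[float]:
    """Toy decoder: 1-D latent -> 2-D data space."""
    return [z, z]

def kl_to_standard_normal(mu: float, log_var: float) -> float:
    """KL divergence between N(mu, sigma^2) and N(0, 1) in one dimension."""
    return -0.5 * (1.0 + log_var - mu * mu - math.exp(log_var))

rng = random.Random(0)
x = [1.0, 3.0]
mu, log_var = encode(x)
z = sample_latent(mu, log_var, rng)
x_hat = decode(z)
print("latent mean:", mu, "sample:", z, "reconstruction:", x_hat)
print("KL term:", kl_to_standard_normal(mu, log_var))
```

In a real VAE both mappings are neural networks trained jointly, with the reconstruction error and the KL term added together as the loss.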

NLP models have shifted steadily toward transformers. The field began with basic bag-of-words models and has arrived at today's transformer architectures. Along the way came significant sequence models such as the RNN (Recurrent Neural Network) and the LSTM (Long Short-Term Memory). The paper “Attention Is All You Need” introduced the transformer, an architecture built entirely on attention, and led to the rapid development of attention-driven models. Attention lets a model focus on the most relevant parts of its input, and concentrating on what is pivotal brought improvements across many tasks.

Moving from the LSTM to the bidirectional LSTM was a major step forward. A standard LSTM reads a sequence in one direction, so each token sees only the context that came before it. A bidirectional LSTM uses two LSTMs: one maintains the context of past tokens and the other maintains the context of future tokens, so each token is learned in the context of both.
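The idea of reading a sequence in both directions can be shown with a deliberately simplified recurrence. Note that this is not an LSTM: the toy update `h = 0.5 * h + x` has no gates or cell state and stands in for one only to show how forward and backward states are paired per token.

```python
def run_recurrent(inputs: list[float]) -> list[float]:
    """Toy recurrence h_t = 0.5 * h_(t-1) + x_t.
    A real LSTM would add input, forget, and output gates plus a cell state."""
    states, h = [], 0.0
    for x in inputs:
        h = 0.5 * h + x
        states.append(h)
    return states

def bidirectional(inputs: list[float]) -> list[tuple[float, float]]:
    """Pair each position's forward state (past context)
    with its backward state (future context)."""
    forward = run_recurrent(inputs)
    backward = run_recurrent(inputs[::-1])[::-1]
    return list(zip(forward, backward))

tokens = [1.0, 2.0, 4.0]  # stand-ins for token embeddings
for position, (past, future) in enumerate(bidirectional(tokens)):
    print(position, "past context:", past, "future context:", future)
```

The first token's forward state knows only about itself, while its backward state already summarizes the two tokens that follow it.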

The transformer architecture is built around parallel processing and long-range dependencies, which has brought significant improvements in training speed and model performance. In its original form it is an encoder-decoder architecture (as explained earlier). It has two major components: self-attention, in which each token in the input sequence establishes a relationship with every other token, and positional encoding, which is added to the token embeddings to retain positional information because transformers do not process tokens sequentially. A further well-known concept is multi-head attention, which runs several attention operations in parallel so the model can attend to different aspects of the data.
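Both components can be sketched in a few lines of plain Python. This is a simplified, single-head illustration: the embeddings are made-up values, the queries, keys, and values are all taken to be the input itself (a real transformer applies learned projections first), and the sinusoidal encoding is the fixed scheme from the original transformer paper.

```python
import math

def positional_encoding(position: int, d_model: int) -> list[float]:
    """Sinusoidal encoding: sine on even dimensions, cosine on odd ones."""
    encoding = []
    for i in range(d_model):
        angle = position / (10000 ** ((2 * (i // 2)) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x: list[list[float]]):
    """Scaled dot-product self-attention with Q = K = V = x.
    Learned projection matrices are omitted to keep the sketch short."""
    d = len(x[0])
    outputs, weights = [], []
    for query in x:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in x]
        w = softmax(scores)  # how much this token attends to every token
        weights.append(w)
        outputs.append([sum(w_j * value[c] for w_j, value in zip(w, x)) for c in range(d)])
    return outputs, weights

# Hypothetical 4-dimensional embeddings for a three-token sequence.
embeddings = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
x = [
    [e + p for e, p in zip(embedding, positional_encoding(position, 4))]
    for position, embedding in enumerate(embeddings)
]
outputs, weights = self_attention(x)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row is one token's attention distribution over the whole sequence. Multi-head attention would repeat this with several different projections and concatenate the results.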

BERT is an encoder-only model, while GPT models such as GPT-2 are decoder-only models designed to generate responses, producing text one token at a time from the internal representation of the preceding context.
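One way to picture the encoder-only versus decoder-only distinction is through the attention mask, which says which tokens each position is allowed to look at. The sketch below is illustrative only; the function names are hypothetical and real implementations usually build these masks as tensors.

```python
def bidirectional_mask(n: int) -> list[list[int]]:
    """Encoder-style (BERT-like): every token may attend to every token."""
    return [[1] * n for _ in range(n)]

def causal_mask(n: int) -> list[list[int]]:
    """Decoder-style (GPT-like): token i may attend only to tokens 0..i,
    so generation never peeks at future tokens."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]

tokens = ["the", "cat", "sat"]
print("encoder mask:")
for row in bidirectional_mask(len(tokens)):
    print(row)
print("decoder mask:")
for row in causal_mask(len(tokens)):
    print(row)
```

The lower-triangular decoder mask is what makes left-to-right text generation possible, while the full encoder mask suits tasks that need context from both sides.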

Transformers [Primarily Encoder and Decoder based]:

(a) BERT (Bidirectional Encoder Representations from Transformers): mostly used for understanding linguistic context, but can also be adapted for tasks involving generation.

(b) GPT (Generative Pre-trained Transformer): pre-trained on an extensive corpus of text data and optimized for particular tasks such as text generation.

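BERT is pre-trained with two core objectives: MLM (masked language modeling), which hides some input tokens and trains the model to predict them, and NSP (next sentence prediction), which trains the model to judge whether one sentence follows another.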

By combining MLM with NSP, BERT acquires a thorough understanding of language, encompassing both fine-grained token-level context and coarse-grained sentence-level interactions. This dual-objective pre-training enables BERT to perform exceptionally well on a wide range of downstream NLP tasks after fine-tuning.
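How training examples for the two objectives might be prepared can be sketched as follows. This is a simplified illustration with hypothetical helper names: it works on whitespace tokens rather than a real subword vocabulary, and it always substitutes `[MASK]`, whereas BERT's full recipe selects roughly 15% of tokens and sometimes swaps in a random token or leaves the original in place.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens: list[str], mask_prob: float = 0.15, seed: int = 0):
    """MLM input: hide some tokens and keep the originals as labels.
    A label of None means 'not masked, nothing to predict here'."""
    rng = random.Random(seed)
    masked, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(token)
        else:
            masked.append(token)
            labels.append(None)
    return masked, labels

def make_nsp_example(sentence_a: list[str], sentence_b: list[str], is_next: bool):
    """NSP input: [CLS] A [SEP] B [SEP], labeled with whether B follows A."""
    tokens = ["[CLS]"] + sentence_a + ["[SEP]"] + sentence_b + ["[SEP]"]
    return tokens, is_next

sentence_a = "the bank raised interest rates".split()
sentence_b = "borrowers will pay more".split()

masked, labels = mask_tokens(sentence_a + sentence_b, mask_prob=0.3, seed=1)
print(masked)
print(labels)
print(make_nsp_example(sentence_a, sentence_b, is_next=True))
```

MLM supplies the token-level signal and NSP the sentence-level signal described above.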

Many models follow BERT's approach to handle tasks in a similar fashion, including domain-specific variants for finance, retail, and allied industries. Several well-known models build directly on BERT:

(a) RoBERTa handles longer sequences and was trained on much more data.

(b) DistilBERT is a lighter, smaller version of BERT that gives up little performance.

Other variants such as TinyBERT and XLM-R (Cross-lingual Language Model, RoBERTa-based) show how widely BERT and transformers are used in day-to-day practice.

Since the arrival of GPT-3, the quality of generated text has improved markedly, and many companies have built on those gains following the introduction of OpenAI's ChatGPT. GPT-3 has 175 billion parameters; GPT-4 is generally understood to be larger, although OpenAI has not published its parameter count. These models use the core transformer architecture with enhancements to layer normalization and scaling techniques, together with far more diverse training data. Alongside the increase in model size, there has been a focus on aligning the generated text with human expectations, which is done by fine-tuning with reinforcement learning from human feedback (RLHF).
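To get a feel for what 175 billion parameters means in practice, a quick back-of-the-envelope calculation helps. The sketch below only counts the memory needed to hold the weights, assuming 4 bytes per parameter for float32 and 2 bytes for float16. It ignores activations, optimizer state, and any compression, so real requirements differ.

```python
def weights_memory_gb(num_parameters: int, bytes_per_parameter: int) -> float:
    """Memory needed just to store the weights, in gigabytes (10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9

GPT3_PARAMETERS = 175_000_000_000  # figure quoted in the text

for precision, size in [("float32", 4), ("float16", 2)]:
    print(precision, weights_memory_gb(GPT3_PARAMETERS, size), "GB")
```

Even at half precision the weights alone come to about 350 GB, which is why models of this size are served across multiple accelerators.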

Many companies have since built their own large language models (LLMs). Major LLMs and providers include Cohere, Claude (from Anthropic), PaLM, Llama, LaMDA, StableLM, Falcon and Gemini. There is a lot of ongoing research into benchmarking these models, using evaluation against ground-truth text data and various comprehension-based metrics.

4. How to Begin Using Generative AI

We must first set up our environment with the required libraries, such as PyTorch or TensorFlow, before we can begin exploring generative AI.

We can start by installing a tool like Anaconda or Miniconda to get Python along with the packages mentioned above. Alternatively, we can install Python directly from python.org and consult its documentation and setup pages. Once Python is installed, we can use the relevant libraries for model building and call other LLMs through their APIs. LangChain and LlamaIndex are widely used for developing generative AI applications.
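After installing Python, a small script can confirm which of the libraries mentioned in this section are available in the current environment. This is a minimal sketch using only the standard library. The import names `torch`, `tensorflow`, `langchain`, and `llama_index` are the usual module names for these packages, but treat them as assumptions and adjust the list to match your setup.

```python
import importlib.util
import sys

def is_installed(module_name: str) -> bool:
    """True if a top-level module can be found, without importing it."""
    return importlib.util.find_spec(module_name) is not None

def environment_report(modules: list[str]) -> dict[str, bool]:
    """Map each module name to whether it is available."""
    return {name: is_installed(name) for name in modules}

print("Python", sys.version.split()[0])
libraries = ["torch", "tensorflow", "langchain", "llama_index"]
for name, available in environment_report(libraries).items():
    print(f"{name}: {'installed' if available else 'missing'}")
```

Anything reported as missing can then be installed with the package manager you chose, such as `conda` or `pip`.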

Photo by Possessed Photography on Unsplash (Free for commercial use)
